It’s time to reboot your learning measurement

What's the current state of your learning measurement strategy? Have you taken an honest look at how you measure success and perhaps concluded that it's time for a reboot?

If your L&D department already has robust measurement practices, you may need only to tweak your current approach. However, if you have not been measuring, or have been doing it half-heartedly, now is a good time to get serious. In this new world, where delivery options are limited, it is critical to know whether your virtually delivered programs are increasing skills and advancing business outcomes. You need to know not only whether online training is working but also, if it is not, why. Does a distracting home environment impede learning? Does Zoom enable or inhibit virtual networking? Are managers less likely to coach their employees when they are "out of sight and out of mind"? If you have good practices, enhance them; if you don't, commit to putting them in place.

Four steps to consider

If your measurement efforts need a reboot, here are four steps to consider:

- Remember the mantra: start with the end in mind. Before you design the program, clearly identify the ultimate outcome it should impact (e.g., improved customer loyalty, accelerated innovation, enhanced employee engagement). These outcomes are the touchstone of your measurement efforts.

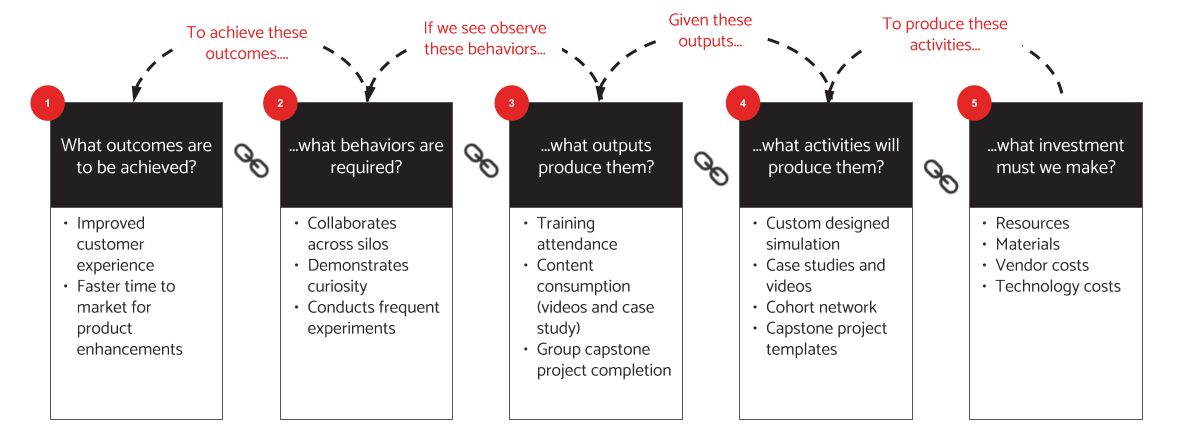

- Work backwards from the planned outcomes using a framework called a logic model. This process produces a graphic that shows how your program's resources, activities, outputs, outcomes, and impact link together, each one leading to the next.

- Develop your measurement plan. Document the data you need to gather and when to gather it (before, during, immediately after, and 90 days after the program).

- Take a reality check. Can you get business impact data at the level of granularity needed to isolate the impact of training? If you cannot get the business data, get the next best thing: behavioral improvement data supplemented by data from learning surveys.

The four steps in action

Let’s walk through each of these four steps using an example.

Step 1: Start with the end in mind

An organization had been losing its competitive edge because of delayed product enhancements and a poor customer experience whenever clients migrated to a new release. After an extensive analysis, the business leaders concluded that the organization's designers lacked design thinking skills. The product development leaders believed that stronger design thinking skills would improve the customer experience and reduce time to market for product enhancements.

Step 2: Develop a logic model

The organization used this starting point to work backwards from the goal to the behaviors, then to the outputs, the activities, and ultimately the investment. Given the goals, the leaders decided to focus on developing three specific behaviors:

- Cross-silo collaboration: Lack of collaboration had led teams to prioritize enhancements that did not adequately address customer requirements. Leaders believed that organization-wide collaboration would produce more diverse ideas and innovative approaches to meet customers’ stated and unstated needs.

- Curiosity: The product leadership team recognized that their designers did not ask meaningful questions or explore creative customer solutions. Greater curiosity would enable employees to uncover new opportunities and would shift their focus from what was easy for them to what would most benefit the customer.

- Experimentation: Product development leaders felt that frequent, short experiments would not only accelerate learning but also help teams course-correct quickly and achieve better results.

Next, the leadership team identified the outputs required to produce these behaviors. In our example, leaders agreed that all learners would attend training, consume content, and complete a capstone project.

Having identified the outputs, the leaders needed to determine which activities would produce them. In this scenario, the organization had to develop a design thinking simulation, create videos, publish a case study, and design a template for executing a capstone project. It opted for a simulation because a simulation could build the skills without in-person delivery.

The last step asks: what investment must leaders make to produce the learning activities? Here, the investment included resources, materials, vendor expenses, and technology fees.
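If you keep program plans in a script or notebook, the chain built in this step can also be recorded as a simple data structure. The sketch below is a minimal Python illustration, assuming the stage names used in this example (investment, activities, outputs, behaviors, outcomes); `LogicModel`, `planning_order`, and `missing_links` are hypothetical names, not part of any measurement tool.

```python
from dataclasses import dataclass, field

# Stages in cause-and-effect order, as used in the design thinking example.
STAGES = ("investment", "activities", "outputs", "behaviors", "outcomes")


@dataclass
class LogicModel:
    """Hypothetical container for one program's logic model."""
    investment: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    behaviors: list[str] = field(default_factory=list)
    outcomes: list[str] = field(default_factory=list)


def planning_order(model: LogicModel) -> list[tuple[str, list[str]]]:
    """Return the stages in planning order: start with the outcomes and work backwards."""
    return [(stage, getattr(model, stage)) for stage in reversed(STAGES)]


def missing_links(model: LogicModel) -> list[str]:
    """Return any empty stage; an empty stage breaks the chain from investment to outcomes."""
    return [stage for stage in STAGES if not getattr(model, stage)]


# The design thinking program described in this example.
design_thinking = LogicModel(
    investment=["resources", "materials", "vendor expenses", "technology fees"],
    activities=["design thinking simulation", "videos", "case study",
                "capstone project template"],
    outputs=["attend training", "consume content", "complete capstone project"],
    behaviors=["cross-silo collaboration", "curiosity", "experimentation"],
    outcomes=["improved customer experience",
              "reduced time to market for product enhancements"],
)

for stage, items in planning_order(design_thinking):
    print(f"{stage}: {', '.join(items)}")
print("missing links:", missing_links(design_thinking) or "none")
```

Storing the stages in cause-and-effect order but listing them outcomes-first mirrors the "start with the end in mind" advice: the model reads forward as a chain of cause and effect, while planning runs in reverse.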

The graphic below shows the high-level logic model for the end-to-end program.

Step 3: Create a measurement plan


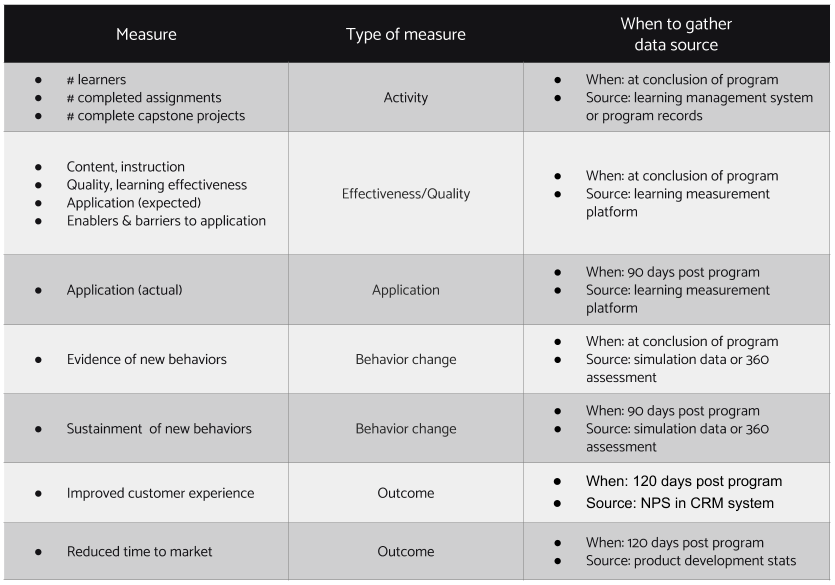

Having created the logic model, the business and L&D leaders needed to create a measurement plan. The plan below identifies what data they required and when they needed to collect it. Note that the plan includes data for activities, quality, behaviors, and outcomes. These diverse measures connect the links in the chain and demonstrate how each link leads to the next.
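A measurement plan is essentially a list of measures, each tagged with its type and its collection point. The Python sketch below assumes the four measure types named in this step and the collection points from the four-step overview; the specific rows and their timing are hypothetical illustrations drawn from the design thinking example, not the plan the organization actually used, and `schedule` and `missing_types` are illustrative helper names.

```python
from collections import defaultdict

# Collection points from the four-step overview, in chronological order.
COLLECTION_POINTS = ("before", "during", "immediately after", "90 days after")

# Measure types the plan should cover, one per link in the chain.
MEASURE_TYPES = ("activities", "quality", "behaviors", "outcomes")

# Hypothetical plan rows: (measure type, measure, collection point).
# The timing assignments are illustrative only.
PLAN = [
    ("activities", "training attendance", "during"),
    ("activities", "content consumption", "during"),
    ("activities", "capstone project completion", "immediately after"),
    ("quality", "learning survey results", "immediately after"),
    ("behaviors", "collaboration, curiosity, experimentation (baseline)", "before"),
    ("behaviors", "collaboration, curiosity, experimentation (follow-up)", "90 days after"),
    ("outcomes", "customer experience, time to market (baseline)", "before"),
    ("outcomes", "customer experience, time to market (follow-up)", "90 days after"),
]


def schedule(plan):
    """Group measures by collection point so each data-gathering window has a checklist."""
    by_point = defaultdict(list)
    for measure_type, measure, when in plan:
        if when not in COLLECTION_POINTS:
            raise ValueError(f"unknown collection point: {when!r}")
        by_point[when].append(f"{measure_type}: {measure}")
    # Keep chronological order and skip windows with nothing to collect.
    return {point: by_point[point] for point in COLLECTION_POINTS if point in by_point}


def missing_types(plan):
    """Return measure types with no rows; each gap is an unmeasured link in the chain."""
    covered = {measure_type for measure_type, _, _ in plan}
    return [t for t in MEASURE_TYPES if t not in covered]


for point, items in schedule(PLAN).items():
    print(point)
    for item in items:
        print("  -", item)
print("uncovered measure types:", missing_types(PLAN) or "none")
```

Checking for uncovered measure types is one simple way to test whether a plan really connects every link in the chain: if no behavior data were planned, for instance, nothing would bridge the activity data and the outcome data.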

Step 4: Take a reality check

The last step is the reality check. You know what data you would like to have, but realistically, can you get it? Data collection gets trickier with outcome data. This information lives in a business system, so in theory it is available. However, the data must also be in a form that lets you isolate the impact of training on these measures. And when there is a long delay between program delivery and the expected impact, isolation becomes even more challenging. If you cannot get the outcome data in the form you need, or cannot isolate cause and effect, commit to gathering high-quality data on behaviors as a proxy for business outcomes.

In our design thinking example, the business leaders realized that isolating the impact of design thinking skills would be difficult because of the time delay and because they were also changing their client engagement process, so any change in outcomes could not be attributed to training alone. They decided that if they obtained valid and reliable data from their simulations, they could conclude that the training was having an impact.

While this process may seem time-consuming, it has multiple benefits:

- It answers the question: can we get from A to B? It creates a logical flow that links the program inputs to the ultimate outcomes.

- It engages business stakeholders in the process and builds commitment to support the program and accomplish the desired outcomes.

- It focuses the effort and ensures that the program does not try to accomplish more than the available time and resources allow, given the desired impact.

You are now ready to design the program and the methods to gather the data to evaluate program efficiency, effectiveness, and impact.

Peggy Parskey, Assistant Director, Center for Talent Reporting


Peggy is responsible for building the measurement capability of talent practitioners in executing the Talent Development Reporting Principles. She is also a part-time staff member at Explorance, where she consults with HR and learning practitioners to develop talent measurement strategies, create action-oriented reports, and conduct impact studies that demonstrate the link between talent programs and business outcomes. She has more than 25 years of experience driving strategic change to improve organizational and individual performance.

She has coauthored three books on learning measurement: “Learning Analytics: Using Talent Data to Improve Business Outcomes” (2nd edition, with John Mattox and Cristina Hall, Kogan Page, 2020), “Measurement Demystified” (with David Vance, ATD Publishing, 2020), and “Measurement Demystified Field Guide” (with David Vance, ATD Publishing, 2021).