Making the most of your learning data: turning insights into action

If you are a data analyst, presenting your findings is where your job often ends. You have told a compelling story that engages your audience. You have dutifully recommended next steps.

The client (who may be internal or external) thanks you, asks for the slides and says, “I’ll follow up if I have questions.” The client studies the slides and asks about a few specifics (“Why was the result on slide 6 inconsistent with the data on slide 10?”). And then… silence.

What just happened? There are several reasons why the results of well-crafted analyses do not go anywhere. One reason may be that organizational priorities have changed since you conducted the study. If your study took several months to complete, it is possible that the underlying business case is no longer a priority. If you analyzed the results of a multi-day, in-person leadership training program, COVID-19 may have made your results irrelevant.

A second reason for the silence could be that the stakeholders did not like the findings, conclusions, or recommendations. For example, in your leadership program analysis you reported that senior leaders had larger competency gaps than their direct reports. You recommended immersive training for senior leaders so they could quickly upskill and become role models for their direct reports. However, the primary audience for your findings is made up of the very people who are accountable for acting on the results. And they do not believe that change starts with them.

A final plausible reason for non-use is that the end-to-end process (study design, implementation, and analysis) was not designed for use. In 1978, Michael Quinn Patton published a book called “Utilization-Focused Evaluation”. This evaluation method focuses on supporting “intended use by intended users”. But to drive use, stakeholders must be engaged in the evaluation of the program and accountable for acting on the findings. When you ignore the taking-action part of the process, you may discover that important findings sit on a shelf gathering dust.

Optimize for action

Considering all the possible barriers to use, how can you maximize the likelihood that meaningful findings will drive action?

Consider reframing the findings. Instead of ‘what’s working?’ and ‘what’s not working?’ adopt action-oriented language. Of the findings, which are actionable now, which are not actionable now and which are ambiguous?

Then, for each type of finding, either align your results with strategic priorities to create urgency to act, or monitor the results to highlight potential downstream actions.

“Actionable now” findings

The first category of findings is “Actionable Now.” Findings in this category, if acted upon, will enable the organization to achieve its strategic goals or maintain its momentum. These findings should identify areas that need improvement as well as areas that the organization should sustain.

As an example, consider the leadership development program analysis we mentioned earlier. Your analysis identified that leader cohort groups were meeting monthly and exchanging best practices and challenges. Participants stated that the cohort communities provided significant value to their ongoing development. However, you also found that the business acumen modules were too generic and not tailored to the unique needs of each business unit. Both findings are actionable: the program designers need to support the leadership community to ensure its ongoing value for development, and they need to customize the business acumen content for each business unit.

“Not actionable now” findings

The next category includes findings that are “Not Actionable Now.” Within this category, segment the results into two areas: those that are tied to organizational priorities and those that are not. As another example, consider an organization that conducted a program to develop data storytelling skills. This organization also had rigorous diversity goals to ensure gender parity in critical organizational capabilities. The analysis found no differences in either the gender composition of the courses or the level of competency gain. These findings did not necessitate any immediate action by the program owners. However, given the priority of gender parity, the program owners committed to ongoing monitoring to ensure these results would remain consistent as they deployed the training more broadly.

This category, as the name suggests, does not require action. In some cases, though, you should recommend that leaders monitor results to ensure that something doesn’t go awry when they are not looking.

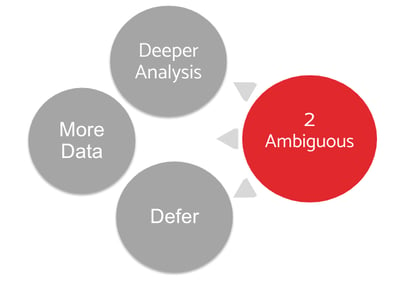

Ambiguous findings

The final category includes findings that are counter-intuitive or confusing. Why did your training program outperform in Asia but not in Europe? Why did your virtual programs have strong scores for content and instruction but a low Net Promoter Score?

When findings are not clear, you have three options. The first is to dig deeper into the data if you can. However, the deeper dive may not reveal the answers to your questions because you require other data (such as demographics) that you did not gather at the outset. The second option is to gather more or different data. Perhaps you require qualitative data from focus groups or interviews to understand the “why” behind the “what”. Or you may need to conduct a targeted study to uncover why certain behaviors are not sticking in certain business units. Your third option is simply to put the results on the shelf and not pursue them.

Which option you choose depends on how important answering the question is to the business. If your corrective action requires a clear insight, then dig deeper or get more data.
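For analysts who track findings in code, the triage described so far can be sketched as a small decision function. This is only an illustration under stated assumptions: the names `Finding`, `Category`, `tied_to_priority`, `insight_needed_to_act` and `next_step` are hypothetical and are not part of any established tool or method.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    ACTIONABLE_NOW = "actionable now"
    NOT_ACTIONABLE_NOW = "not actionable now"
    AMBIGUOUS = "ambiguous"


@dataclass
class Finding:
    summary: str
    category: Category
    # Hypothetical flag: is the finding tied to an organizational priority?
    tied_to_priority: bool = False
    # Hypothetical flag: does a corrective action depend on a clear insight?
    insight_needed_to_act: bool = False


def next_step(finding: Finding) -> str:
    """Return a suggested next step for a finding, following the three categories."""
    if finding.category is Category.ACTIONABLE_NOW:
        return "act"
    if finding.category is Category.NOT_ACTIONABLE_NOW:
        # Priority-linked results are monitored; the rest need no action.
        return "monitor" if finding.tied_to_priority else "no action"
    # Ambiguous findings: pursue them only if the business needs the answer.
    if finding.insight_needed_to_act:
        return "dig deeper or gather more data"
    return "shelve"


parity = Finding(
    summary="No gender differences in enrollment or competency gain",
    category=Category.NOT_ACTIONABLE_NOW,
    tied_to_priority=True,
)
print(next_step(parity))
```

In practice the two flags would come from a conversation with stakeholders rather than from the data itself, which is one reason to involve them before the final presentation.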

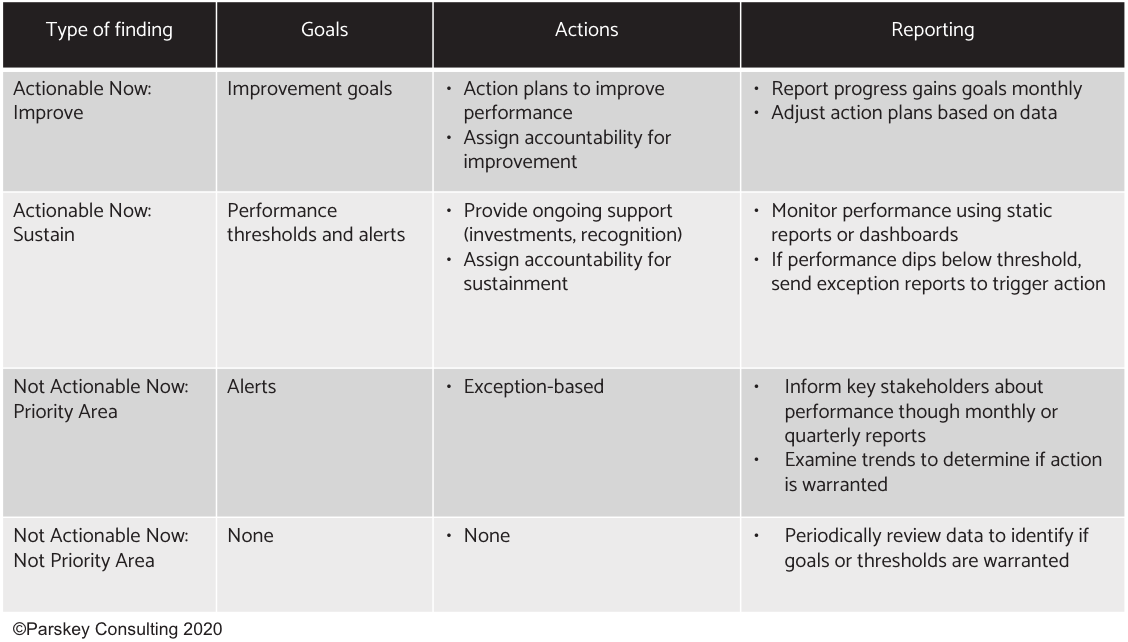

Action planning and reporting

At this point, you have solid recommendations, segmented into categories that clearly spell out “what’s next”. One last step remains: identifying how the organization should monitor and manage the agreed-upon actions.

The table below outlines how to turn your recommendations into ongoing monitoring and management of results by:

- Setting goals or performance thresholds based on the type of finding

- Taking actions relevant to each category

- Tailoring reporting to each category to ensure you match the right type and cadence of reporting to the result to be achieved

As you finalize your analysis findings for stakeholders, remember that the finish line is not the final deck; it is getting the results used. Analysts talk about the “What?” and the “So What?”... Don’t forget about the “Now What?” Be clear and specific and you may well avoid the deadly silence that befalls many analyses.